Fawkes: Protecting Personal Privacy against Unauthorized Deep Learning Models.

Shawn Shan, Emily Wenger, Jiayun Zhang, Huiying Li, Haitao Zheng, and Ben Y. Zhao.

In Proceedings of USENIX Security Symposium 2020. (Download PDF here)

Fawkes Source Code on GitHub, for development.

2020 is a watershed year for machine learning. It has seen the true arrival of commoditized machine learning, where deep learning models and algorithms are readily available to Internet users. GPUs are cheaper and more readily available than ever, and new training methods like transfer learning have made it possible to train powerful deep learning models using smaller sets of data.

But accessible machine learning also has its downsides. A recent New York Times article by Kashmir Hill profiled clearview.ai, an unregulated facial recognition service that has downloaded over 3 billion photos of people from the Internet and social media and used them to build facial recognition models for millions of citizens without their knowledge or permission. Clearview.ai demonstrates just how easy it is to build invasive tools for monitoring and tracking using deep learning.

So how do we protect ourselves against unauthorized third parties building facial recognition models that recognize us wherever we may go? Regulations can and will help restrict the use of machine learning by public companies but will have negligible impact on private organizations, individuals, or even other nation states with similar goals.

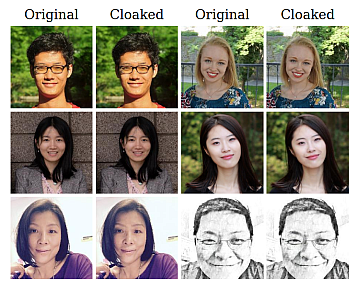

The SAND Lab at University of Chicago has developed Fawkes¹, an algorithm and software tool (running locally on your computer) that gives individuals the ability to limit how their own images can be used to track them. At a high level, Fawkes takes your personal images and makes tiny, pixel-level changes that are invisible to the human eye, in a process we call image cloaking. You can then use these "cloaked" photos as you normally would, sharing them on social media, sending them to friends, printing them or displaying them on digital devices, the same way you would any other photo. The difference, however, is that if and when someone tries to use these photos to build a facial recognition model, "cloaked" images will teach the model a highly distorted version of what makes you look like you. The cloak effect is not easily detectable by humans or machines and will not cause errors in model training. However, when someone tries to identify you by presenting an unaltered, "uncloaked" image of you (e.g. a photo taken in public) to the model, the model will fail to recognize you.
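To make the idea of a tiny, bounded pixel-level change more concrete, here is a toy sketch in Python. It is not the Fawkes implementation. It assumes a made-up linear "feature extractor" `W`, a per-pixel budget `eps`, and a hypothetical `cloak` helper that nudges an image's features toward those of a different target image using projected gradient descent. All names, sizes, and parameters are illustrative assumptions.

```python
import random

def features(W, x):
    """Toy linear stand-in for a face feature extractor: returns W @ x."""
    return [sum(w * v for w, v in zip(row, x)) for row in W]

def sq_dist(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((p - q) ** 2 for p, q in zip(a, b))

def cloak(W, x, x_target, eps=0.03, iters=200):
    """Hypothetical helper: find a perturbation delta with |delta_i| <= eps
    that moves features(x + delta) toward features(x_target)."""
    target_feat = features(W, x_target)
    # Step size 1/L, where L = 2 * ||W||_F^2 bounds the gradient's Lipschitz
    # constant, so each projected step cannot increase the distance.
    step = 1.0 / (2.0 * sum(w * w for row in W for w in row))
    delta = [0.0] * len(x)
    for _ in range(iters):
        current = [xi + di for xi, di in zip(x, delta)]
        diff = [f - t for f, t in zip(features(W, current), target_feat)]
        # Gradient of ||W(x + delta) - target_feat||^2 w.r.t. delta: 2 * W^T diff
        grad = [2.0 * sum(W[r][c] * diff[r] for r in range(len(W)))
                for c in range(len(x))]
        for i, g in enumerate(grad):
            d = delta[i] - step * g
            d = max(-eps, min(eps, d))          # stay within the pixel budget
            d = max(-x[i], min(1.0 - x[i], d))  # keep pixel values in [0, 1]
            delta[i] = d
    return [xi + di for xi, di in zip(x, delta)]

# Tiny synthetic "images" (16 pixels) and a random 4-dimensional feature map.
rng = random.Random(0)
n_pixels, n_features = 16, 4
W = [[rng.uniform(-1, 1) for _ in range(n_pixels)] for _ in range(n_features)]
x = [rng.uniform(0.2, 0.8) for _ in range(n_pixels)]
x_target = [rng.uniform(0.2, 0.8) for _ in range(n_pixels)]

cloaked = cloak(W, x, x_target)
before = sq_dist(features(W, x), features(W, x_target))
after = sq_dist(features(W, cloaked), features(W, x_target))
max_change = max(abs(c - o) for c, o in zip(cloaked, x))
print(f"feature distance to target: {before:.4f} -> {after:.4f}")
print(f"largest per-pixel change: {max_change:.4f} (budget {0.03})")
```

In this toy setting, no pixel moves by more than `eps`, yet the image's features shift toward the target's. A real system would use a deep face feature extractor and a perceptual measure of visible change rather than a simple per-pixel bound.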

Fawkes has been tested extensively and proven effective in a variety of environments, and it is 100% effective against state-of-the-art facial recognition models (Microsoft Azure Face API, Amazon Rekognition, and Face++). We are in the process of adding more material here to explain how and why Fawkes works. For now, please see the link below to our technical paper, which will be presented at the upcoming USENIX Security Symposium, to be held on August 12 to 14.

The Fawkes project is led by two PhD students at SAND Lab, Emily Wenger and Shawn Shan, with important contributions from Jiayun Zhang (SAND Lab visitor and current PhD student at UC San Diego) and Huiying Li, also a SAND Lab PhD student. The faculty advisors are SAND Lab co-directors and Neubauer Professors Ben Zhao and Heather Zheng.

¹ The Guy Fawkes mask, à la V for Vendetta.

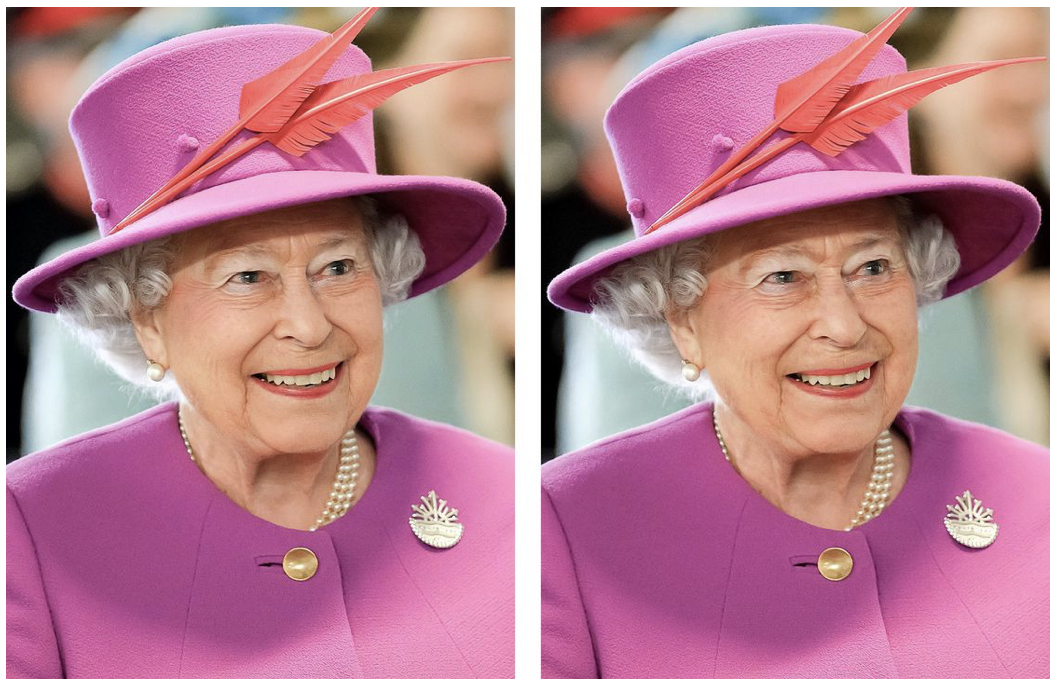

In addition to the photos of the team cloaked above, here are a couple more examples of cloaked images and their originals. Can you tell which is the original? (Cloaked image of the Queen courtesy of The Verge.)
