It’s no secret that training artificial intelligence algorithms requires insane amounts of energy, but as a new paper reveals, it also uses up an absurd amount of water.

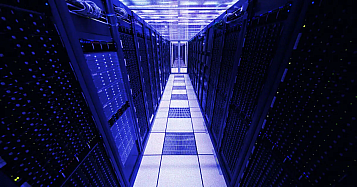

Researchers from the University of California Riverside and the University of Texas Arlington have shared a yet-to-be-peer-reviewed paper titled «Making AI Less Thirsty» that looks into the environmental impact of AI training, which needs not only copious amounts of electricity but also tons of water to cool the data centers.

When looking into how much water is needed to cool the data processing centers employed by companies like OpenAI and Google, the researchers found that in training GPT-3 alone, Microsoft, which is partnered with OpenAI, consumed a whopping 185,000 gallons of water, which is, per their calculations, equivalent to the amount of water needed to fill a nuclear reactor’s cooling tower.

As the paper notes, the water Microsoft used to cool its US-based data centers while training GPT-3 was enough to produce «370 BMW cars or 320 Tesla electric vehicles.» If they’d trained the model in the company’s data centers in Asia, which are even larger, «these numbers would have been tripled.»

Bottle It Up

What’s more: «ChatGPT needs to ‘drink’ [the equivalent of] a 500 ml bottle of water for a simple conversation of roughly 20–50 questions and answers,» the paper notes. «While a 500ml bottle of water might not seem too much, the total combined water footprint for inference is still extremely large, considering ChatGPT’s billions of users.»
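To put that bottle in per-question terms, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above (one 500 ml bottle per 20 to 50 questions and answers); the function names and the one-million-exchange scenario are illustrative assumptions, not numbers from the paper.

```python
# Figures quoted from the paper: one 500 ml bottle per 20-50 exchanges.
BOTTLE_ML = 500
EXCHANGES_LOW, EXCHANGES_HIGH = 20, 50


def water_per_exchange_ml(bottle_ml=BOTTLE_ML, low=EXCHANGES_LOW, high=EXCHANGES_HIGH):
    """Return (min_ml, max_ml) of water per single question-and-answer exchange."""
    # More exchanges per bottle means less water per exchange, and vice versa.
    return bottle_ml / high, bottle_ml / low


def liters_for(exchanges, ml_per_exchange):
    """Scale a per-exchange figure (in ml) to a total in liters."""
    return exchanges * ml_per_exchange / 1000


if __name__ == "__main__":
    low_ml, high_ml = water_per_exchange_ml()
    print(f"Per exchange: {low_ml:.0f} to {high_ml:.0f} ml")

    # Hypothetical volume, chosen only to illustrate scaling (an assumption).
    hypothetical_exchanges = 1_000_000
    print(
        f"{hypothetical_exchanges:,} exchanges: "
        f"{liters_for(hypothetical_exchanges, low_ml):,.0f} to "
        f"{liters_for(hypothetical_exchanges, high_ml):,.0f} liters"
    )
```

By this estimate, each exchange works out to somewhere between 10 and 25 ml, which is how a single small bottle turns into a large footprint once the number of conversations climbs.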

As for what to do about this glaring issue amid repeated warnings of water shortages, the researchers don’t offer all that much in the way of advice.

At the very least, companies like Google and OpenAI «can, and also should, take social responsibility and lead by example by addressing their own water footprint,» the researchers write, a first step in quenching AI’s unslakable «thirst.»

Image: Getty Images / Futurism